This post signifies 2 very rare things. The first one, obviously, is the frequency of posting. Normally I aim for 1 post per year, and it's a culmination of my thoughts and events over the past year.

This time, you get to tag along with how I approach a project and execute things. Consider yourself lucky. Since this will be a more technical post, I will try to keep my jokes limited.

I almost wrote "academic" post and had to throw up. It is definitely not that, and it's better that way. I would stick with technical.

(Edit - The barrage of jokes overpowered my will to ignore them. Enjoy)

Some backstory is needed here.

|

| Thermal Image of electrically heated carbon fiber stitched on a base fabric |

Since I work as a HiWi or a "Wissenschaftliche Hilfskraft" and it is one of the very few things keeping me sane, I like to take on various projects and topics and work on them. One of the projects that I have been involved in primarily was the so-called "Infrastick" project. The name comes from the amalgamation of infrared and stickmaschine or stitching machines. The base idea was to integrate elements that emit infrared into the textile base layer so that these fabrics can be used as a heating source in various applications.

The initial idea was to use it as an awning outside shops, since if you have been anywhere in Europe, you know how much Europeans appreciate sitting outside in the narrow alleys, drinking beer and having Kaffee und Kuchen. Instead of big bulky gas heaters, the idea was to use IR-embedded awnings to provide heat to the guests.

|

| Not my image, just something we had in the original proposal for the project |

I was skeptical of this idea mainly because of the power levels involved if you want to heat humans. A human loses roughly 100W of heat on their own, so the human has to absorb at least 100W of radiant power. And because it's radiant heat, the inverse square law really gives you a challenge: for a reasonable heating distance, you are already looking at a radiant heat source of around 1000W.
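To put rough numbers on the inverse square argument, here is a minimal sketch. It assumes a point-like source radiating evenly into a hemisphere (like an awning radiating downwards) and a person who absorbs everything that lands on about 0.6 m² of projected body area. Both the 0.6 m² figure and the function names are assumptions for illustration, not values from the project.

```python
import math

def absorbed_power(source_w, distance_m, body_area_m2=0.6,
                   solid_angle_sr=2 * math.pi):
    """Radiant power (W) intercepted by a body facing the source.

    Assumes a point-like source spreading its output evenly over
    `solid_angle_sr` (2*pi = a hemisphere) and a body that absorbs
    everything falling on its projected area (assumed 0.6 m^2).
    """
    irradiance = source_w / (solid_angle_sr * distance_m ** 2)  # W/m^2
    return irradiance * body_area_m2

def required_source_power(target_w, distance_m, body_area_m2=0.6,
                          solid_angle_sr=2 * math.pi):
    """Source power (W) needed so that the body absorbs `target_w`."""
    return target_w * solid_angle_sr * distance_m ** 2 / body_area_m2

# Doubling the distance quadruples the required source power.
for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} m: {required_source_power(100, d):.0f} W")
```

Under these assumptions, delivering 100W to a person 1 m away already needs roughly 1 kW at the source, which is in line with the estimate above.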

|

| Unrelated |

But, you don't have to just take my word for it. There was a bachelor thesis and also a research paper that was published by our group which goes into detail of how exactly we came to all the conclusions that we did. (Sadly my name is not there in the research paper, because... politics? Aber egal.)

In summary, we picked a commercial IR room heater solution, a fabric-based heater, and the good old tungsten IR lamp, all rated at 1000W of power, built a test bench, defined the DoE (Design of Experiments) on how to do the tests, what relevant values are to be measured from the test bench, design of the test bench itself, design of the electronics and the code, and then finally the data analysis.

|

| Example Circuit Fritzing |

As you can see, it's not a very complicated thing for the data logger. Arduino with 2 MLX90614 Infrared point sensors, and a simple MAX6675 K type thermocouple combo. I had to use the I2C expander because the IR non-contact sensors had the same fixed I2C address, and the LCD I used was also I2C. The Arduino Nano only has a single I2C bus.

The test bench was a stand with a defined distance between the heating sources (one at a time) and a layer of fabric because I assume, and hope, that any people using such a heater will have clothes on. The IR non-contact sensors recorded the temperatures of the heat source and the heat sink, while the thermocouple recorded the ambient temperature, so we can do somewhat scientific comparisons between the tests.

The values were recorded in Excel using the surprisingly easy-to-use serial plugin for MS Excel. It gives us graphs that are pretty to look at.

If you are interested in more details, you can go through the research paper that was published, or you can also of course contact me. Ultimately based on our tests, we realized that we needed to pivot.

|

| Unrelated v2 |

So now we (mostly I) are focused on repurposing the knowledge we gained from all these tests and are developing essentially an office partitioning system that has integrated heating in it as well. And because that is pedestrian and not fancy enough, we are also adding sensors and *AI* into the partition so that it's...

|

| Concept image generated using Black Forest Labs' Flux Schnell |

*clears throat...*

Presenting to you, the smart office partitioning system with integrated heating. Keep your workplace looking crisp and sharp, while also making it comfortable for the people working in the office with intelligently placed infrared heating cables in the fabric itself. Even better, the smart office partition comes with sensors that are capable of doing human presence detection and will not only turn on the heating when there is a human in the room, but actually only turn on the heating in ONLY that specific area, thus limiting power use, increasing cost savings, and thus, making the world a better place, by not only optimizing the human comfort but also saving energy and thus saving the planet while doing so. Obviously, there will be an app to control the heating remotely (it's a smart heater after all), setting personal profiles, timers, and additional features, for only $2.99 monthly.

I'll stop.

Well, so far I have focused more on the physical properties of the product. Because we are using carbon fiber to produce infrared radiation, it needs to be specced properly. Here is a list of things that we had to consider just to design the heating-embedded fabric.

- Size of the fabric

- Total power output expected

- Number of zones for heating

- Operating voltage levels

- Surface temperature of the fabric

- Types of heat-resistant fabric best suited for the application

- Types of carbon fiber best suited for the application

- Best pattern of carbon fiber based on power requirements and voltage limitations

- The best type of carbon fiber *resistivity* for the shortlisted requirement

Keep in mind that this is just the physical requirements list, no electronics here whatsoever, and they are all intertwined with each other. For example, if the carbon fiber we use is thicker, the resistance might be lower, which means for the same length and the same voltage, the heat output would be much larger, and that might not be safe for either the humans, or the fabric, or both. So there needs to be a carefully choreographed balance between all these considerations.
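The thicker-fiber example follows directly from P = V²/R. Here is a minimal sketch, assuming the carbon fiber is characterised by a resistance per metre. The function names and all the numbers (24 V, 10 m, 20 Ω/m) are made up for illustration and are not the project's actual specs.

```python
def strand_power(voltage_v, length_m, ohm_per_m):
    """Electrical power (W) dissipated by one carbon fiber strand.

    R = length * resistance-per-metre, P = V^2 / R.
    """
    resistance = length_m * ohm_per_m
    return voltage_v ** 2 / resistance

def power_density(voltage_v, length_m, ohm_per_m, fabric_area_m2):
    """Heating power per unit of fabric area (W/m^2)."""
    return strand_power(voltage_v, length_m, ohm_per_m) / fabric_area_m2

# Illustrative numbers only: same voltage and length, but the
# "thicker" fiber has half the resistance per metre.
thin = strand_power(voltage_v=24, length_m=10, ohm_per_m=20)
thick = strand_power(voltage_v=24, length_m=10, ohm_per_m=10)
print(f"thin: {thin:.2f} W, thick: {thick:.2f} W")
```

Halving the resistance doubles the heat output at the same voltage and length, so fiber choice, stitched length, and operating voltage cannot be picked independently of each other.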

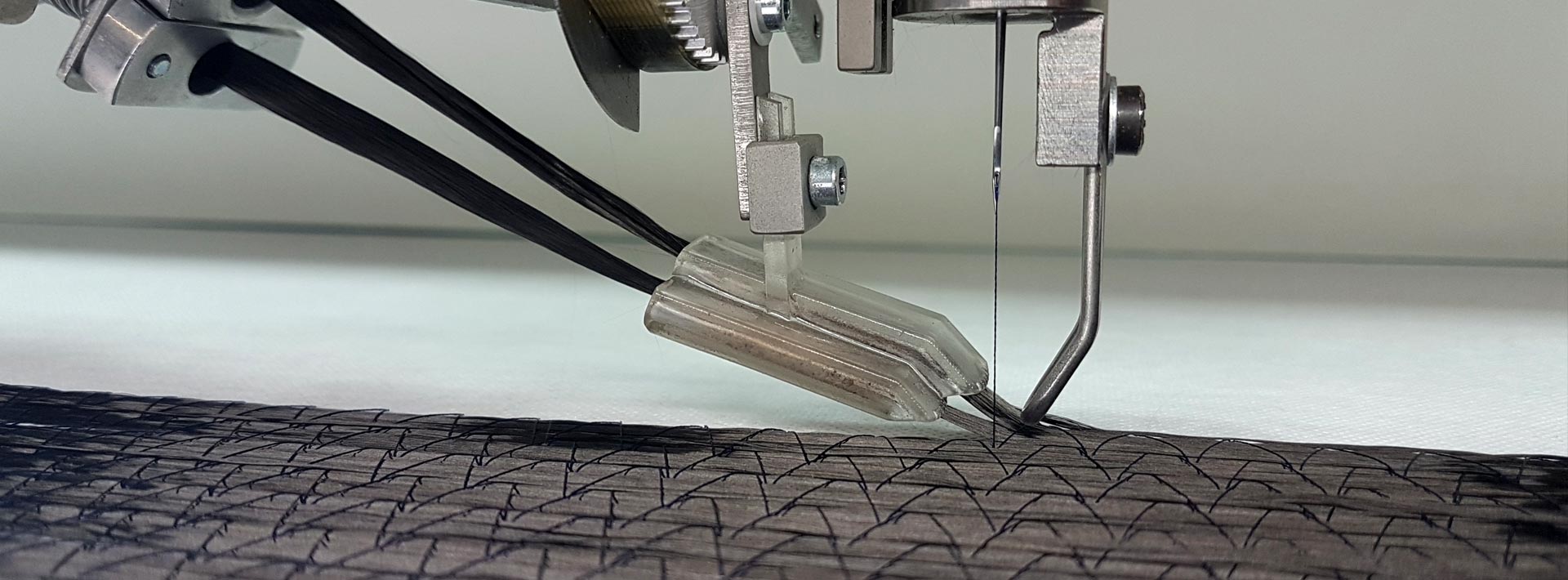

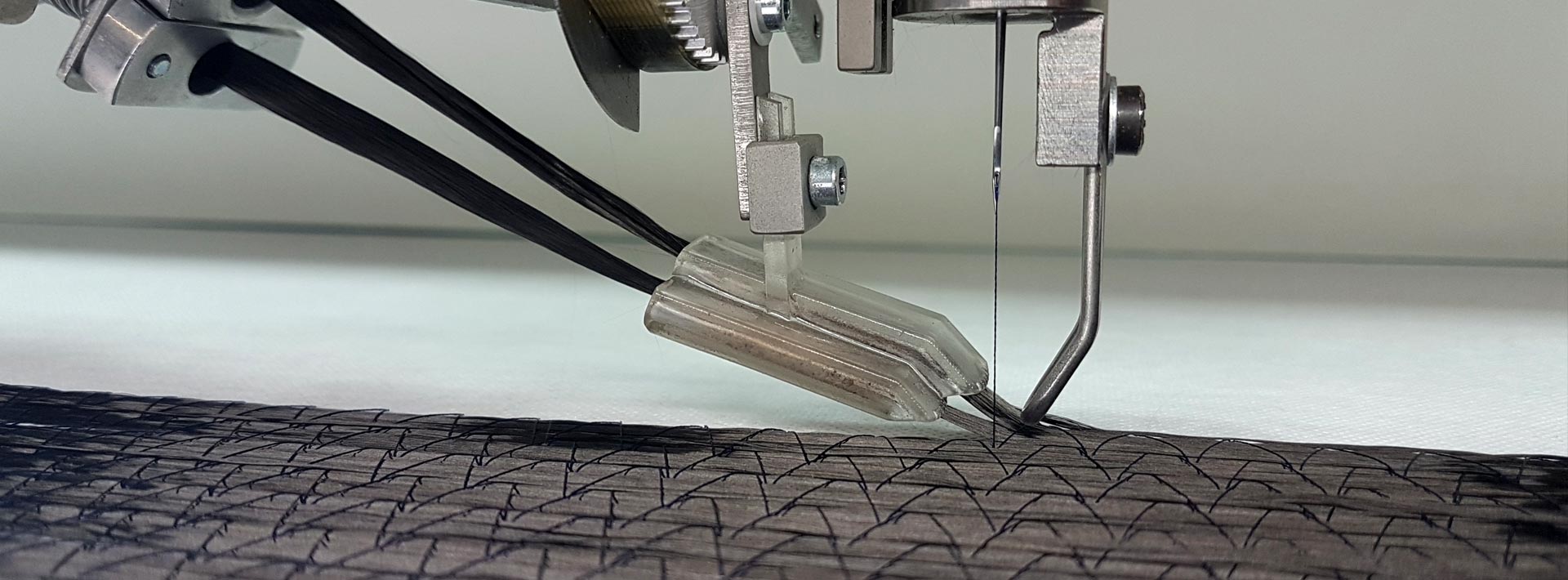

|

| TFP machine from ZSK |

|

| Basic operation of TFP |

Well, yours truly did all that, and now we are finally moving on to the electronics and the *AI* part.

And yes, of course, I cannot directly share our calculations with you, but if you are not AI yourself, you can pretty easily see where we are going with the design in general.

So... now that the background is complete, let's move on...

The problem at hand is to do human detection. Avid readers of this blog (of which there are none, let's be real here) will remember that I was facing the same quandary almost 10 years ago when I was trying to automate things in my room. I wanted to make sensors and software in such a way that my system knew when I was in the room, when I was at my desk, or when I was in my bed, and set the lights and fans and whatever accordingly.

Thankfully, in the last 10 years, a lot has changed. Tech-aware people will already know about human presence sensors that you can buy for your smart home and do smart things with them. Home Assistant is a thing after all. Most of these human presence sensors work on the principles of radar, operating in the 24-60 GHz range, and can detect not only if there is a human, but also where in the room the human is. That is pretty cool.

|

Radar module from Seeed Studio

I wanna try them later someday as well |

I, however, picked the even simpler route. Thanks to the same advancement of technology, you can also pick a thermal imaging module for Arduino. And not just the single-pixel sensor like our trusty MLX90614.

Say hello to the Omron D6T-32L-01A. This is a 32x32 pixel thermopile MEMS sensor. Basically a 32x32 pixel camera that, instead of looking at visible light, looks at the infrared radiation being emitted by objects, and is precise to 0.1 °C from the factory itself. Further calibration can improve results even more. How cool is that?

So now, the problem is how to use this fancy sensor to do human detection? Ideas?

Well, let's go one step at a time. The first question is, can I even get the sensor to work? Let's not mince words here, most of the programming work I have done is on sensors that already have guides available on how to use them with Arduino, and also libraries. This is a specialty sensor, and you can see it in the price, and thus you will not find Last Minute Engineers articles about how to use this.

Thankfully, Omron is kind enough to provide sample code on their GitHub to get us up and running. And here is the very first problem already. The specific variant of the sensor I picked, the 32x32 pixel one, is spitting out too much data for an Arduino Uno or even a Mega to handle. So I need to use one of the modern Arduinos or switch over to an ESP32 or a Raspberry Pi. Out of all these 3, I am most familiar with Arduino, then the RPi, and then the ESP32. So I tried the Raspberry Pi first. And ran into my old enemy, C.

|

| How the sensor is constructed |

I used to take classes in C... maybe 15 years ago, and was never good at it because of course the classes were all software and I could not see things happening in real life. That is why I gravitated to Arduino early on. And even with the Pi, I was mostly using Python and not C, because I started with the Seeed Studio Grove system.

I first tried to run the code directly as shown in the GitHub repo, and when that was successful, I thought about how to do human detection. Since my mind has been polluted to make everything more complex than it needs to be, I thought I should convert the raw data I am getting from the sensor into a thermal camera image, and then maybe I can use things like Viola-Jones or even something like YOLOv5 to detect humans in the 32x32 pixel images. After all, most of the vision CNNs do downscale their images to make them more manageable. I am already running at 32x32x1 (only a temperature value per pixel, not even RGB), so it should be easy to do.
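For the curious, turning one raw frame into an image-like array could look roughly like the sketch below. The frame layout is an assumption that should be checked against the datasheet and Omron's sample code: 2 bytes of internal reference temperature (PTAT), then 1024 little-endian 16-bit values in tenths of a degree Celsius, then 1 checksum byte, which this sketch does not verify. The function names and the 15-40 °C display range are hypothetical.

```python
import numpy as np

N_ROWS = N_COLS = 32
# Assumed layout: 2 bytes PTAT + 1024 pixels * 2 bytes + 1 checksum byte.
FRAME_BYTES = 2 + N_ROWS * N_COLS * 2 + 1

def frame_to_celsius(raw: bytes) -> np.ndarray:
    """Convert one raw frame into a 32x32 array of temperatures in deg C."""
    if len(raw) != FRAME_BYTES:
        raise ValueError(f"expected {FRAME_BYTES} bytes, got {len(raw)}")
    # Skip the 2 PTAT bytes, read 1024 little-endian signed 16-bit values.
    pixels = np.frombuffer(raw, dtype="<i2", count=N_ROWS * N_COLS, offset=2)
    return pixels.reshape(N_ROWS, N_COLS) / 10.0  # tenths of a degree -> deg C

def to_grayscale(temps: np.ndarray, t_min=15.0, t_max=40.0) -> np.ndarray:
    """Map temperatures to 0-255 so the frame can be shown as an image."""
    scaled = (np.clip(temps, t_min, t_max) - t_min) / (t_max - t_min)
    return (scaled * 255).astype(np.uint8)
```

The grayscale array can then be handed to any image library, or to a detector if you insist on the complicated route.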

After sleeping on this thought and also thinking about outsourcing the coding part to another HiWi who is actually familiar with coding, I realized there is a much better way to do this. And yes, an Indian guy thinking of outsourcing a coding job to someone else is hilarious.

|

For the engaged reader who is thinking, why does he not just use a PIR Sensor?

The hint is in the name, you idiot. |

I also briefly thought about using Arduino to read the sensor values (because I am much more comfortable programming Arduino than C on the RPi), and then sending the data over serial to a computer where Processing is running that parses the data and generates the thermal camera image for me. Killing flies with a machine gun here, although it's still cool to try and replicate a thermal imaging camera, which 20 years ago was basically unobtainable to normal humans.

|

Hello!

That is indeed me, waving in front of 1024 thermal radiation-sensitive pixels.

Yes, my nose was cold.

Image made using Numpy |

When you think about what the system needs to do, it only needs to identify some sort of a hotspot with the temperature of the hotspot being roughly in the region of normal human facial temperatures. If we can divide the 1024 pixel array of the sensing zone of the sensor into 2 halves, and then just look for pixel values that are in the given human face temperature range, we should be good to go.
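A minimal sketch of that idea, assuming the frame is already a 32x32 NumPy array in °C. The 28-38 °C window for "face-like" pixels and the function names are assumptions for illustration, not calibrated values.

```python
import numpy as np

# Assumed window for skin/face temperatures as seen by the sensor.
FACE_MIN_C, FACE_MAX_C = 28.0, 38.0

def face_mask(temps: np.ndarray) -> np.ndarray:
    """Boolean mask of pixels inside the assumed face temperature range."""
    return (temps >= FACE_MIN_C) & (temps <= FACE_MAX_C)

def halves_occupied(temps: np.ndarray, min_pixels: int = 1):
    """Return (left, right): does each half contain enough face-like pixels?"""
    mask = face_mask(temps)
    mid = temps.shape[1] // 2
    left = int(mask[:, :mid].sum()) >= min_pixels
    right = int(mask[:, mid:].sum()) >= min_pixels
    return left, right
```

Because the window has an upper bound too, something much hotter than a face, like a lamp or a coffee machine, is ignored by this check.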

|

| How the Sensor Senses |

But then, what if the human is nearby and it's not just 1 pixel but 4 or 5 pixels that are in the human temperature range? Well... I can probably do some sort of clustering and see if adjacent pixels with similar temperature values are grouped together. If they are, it's probably still only 1 human.

But then, what if there are more humans? Well... unless they are really close to each other, which would be physically awkward in an office scenario, you will get pixels or pixel clusters that are already somewhat spaced apart.
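One simple way to do this kind of clustering is a connected-components pass over a boolean hotspot mask. The sketch below uses a breadth-first flood fill with 4-connectivity. It is one possible approach rather than the code running in the project, and `min_pixels` is an assumed noise filter.

```python
from collections import deque
import numpy as np

def label_clusters(mask: np.ndarray):
    """Group 4-connected True pixels.

    Returns a list of clusters, each a list of (row, col) tuples.
    """
    rows, cols = mask.shape
    seen = np.zeros(mask.shape, dtype=bool)
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or seen[r, c]:
                continue
            # Flood fill outwards from this unvisited hot pixel.
            queue = deque([(r, c)])
            seen[r, c] = True
            cluster = []
            while queue:
                y, x = queue.popleft()
                cluster.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny, nx] and not seen[ny, nx]):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            clusters.append(cluster)
    return clusters

def count_people(mask: np.ndarray, min_pixels: int = 2) -> int:
    """Count clusters big enough to be a person rather than a noisy pixel."""
    return sum(1 for cl in label_clusters(mask) if len(cl) >= min_pixels)
```

Two people standing shoulder to shoulder would still merge into one cluster here, which is the awkward office scenario mentioned above.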

But then, how do we do the zone control? Well... if you look at the datasheet for the sensor, you will see how the sensor reads the data and what the scanning zone of the sensor is. Based on this, if I mark the 16th column of the sensor matrix as the dividing line, I can automatically tell whether the human pixel is to the left or to the right of the sensor. That gives me the zone detection I was looking for.
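As a small sketch of the dividing-line idea: given the pixel coordinates of one hotspot cluster, the mean column decides the zone. This assumes 0-based column indices, so columns 0-15 count as left and 16-31 as right. Which side of the room that corresponds to depends on how the sensor is mounted, and `cluster_zone` is a hypothetical helper name.

```python
def cluster_zone(cluster, dividing_col=16):
    """Assign a cluster of (row, col) pixels to the 'left' or 'right' zone.

    Uses the mean column, so a blob straddling the dividing line goes
    to the side where most of it sits.
    """
    mean_col = sum(col for _, col in cluster) / len(cluster)
    return "left" if mean_col < dividing_col else "right"

print(cluster_zone([(5, 3), (5, 4)]))
print(cluster_zone([(10, 20), (11, 20), (11, 21)]))
```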

But then, how do I make sure it's a human only and not something else that's warm? What else can I do to improve this system?

Well... Even if I already implemented the temperature range limit on the pixels that are to be detected by the sensor, it is still not ideal. To get a cleaner signal, it's better to do some sort of background removal so the human hotspots are real hotspots for the sensor. So, after some thinking sessions and back and forth with Gemini, this is the general layout of the human detection that I (and Google Gemini) came up with. Allow me to share the thinking process straight from Gemini's head.

Algorithm Plan:

- Pixel-wise Baseline Temperature: Maintain a baseline temperature for each pixel (a 32x32 array of baseline temps). Update this baseline slowly over time using a smoothing factor.

- Background Subtraction: For each pixel, subtract its baseline temperature from the current temperature reading. This will give us a "differential temperature map" highlighting areas that are warmer than the recent background.

- Hotspot Thresholding: Apply a threshold to the differential temperature map. Pixels with differential temperatures above the threshold are considered "hotspots."

- Clustering (Simple Approach - Connected Components): We can use a simple connected components approach to group adjacent hotspot pixels into clusters. This helps to aggregate the signal from a human and reduce noise from isolated hot pixels. A very basic approach would be to simply count the number of hotspot pixels in the left and right halves.

- Zone Detection based on Hotspot Clusters: Count the number of hotspot pixels in the left and right halves. If the count in the left half is above a certain threshold, consider a "human detected in left zone." Similarly for the right half.

- Output and Pin Control: Maintain the pin control logic based on zone detection. Depending on the zone, trigger the appropriate pin of the Arduino to High or Low.
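The plan above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code that runs on the actual hardware: the class name, the smoothing factor, the 2 °C differential threshold, and the minimum pixel count are all assumed values. One deliberate deviation from the plan as written: the baseline is frozen under current hotspots, so that a person sitting still does not slowly get absorbed into the background.

```python
import numpy as np

class ZoneDetector:
    """Per-pixel baseline, background subtraction, and left/right hotspot counts."""

    def __init__(self, alpha=0.02, delta_c=2.0, min_pixels=3):
        self.alpha = alpha            # smoothing factor for the baseline
        self.delta_c = delta_c        # deg C above baseline to count as a hotspot
        self.min_pixels = min_pixels  # hotspot pixels needed to trigger a zone
        self.baseline = None

    def update(self, temps):
        """Feed one 32x32 frame in deg C, get (left_on, right_on) back."""
        temps = np.asarray(temps, dtype=float)
        if self.baseline is None:
            # Assumption: the first frame shows the empty room.
            self.baseline = temps.copy()
        hot = (temps - self.baseline) > self.delta_c
        # Slowly track ambient drift, but only where nothing is hot.
        blended = (1 - self.alpha) * self.baseline + self.alpha * temps
        self.baseline = np.where(hot, self.baseline, blended)
        mid = temps.shape[1] // 2
        left_on = int(hot[:, :mid].sum()) >= self.min_pixels
        right_on = int(hot[:, mid:].sum()) >= self.min_pixels
        return left_on, right_on
```

The two booleans map directly onto two output pins or relays, one per heating zone.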

Advantages of Hotspot Detection with Background Removal:

- Improved Precision: More focused on local temperature anomalies (hotspots), which are more indicative of a human heat signature than just average temperatures.

- Reduced Sensitivity to Ambient Temperature Changes: Background removal helps to compensate for overall warming or cooling of the environment, making detection more robust.

- Potentially Better Left/Right Zone Discrimination: Hotspot counting in zones should provide more precise zone detection compared to averaging temperatures over the entire half.

I may have inadvertently made it sound like it was easy and instant. It took me 3-4 hours of debugging and chatting with Gemini to get to this, and more importantly, I already had a good idea of how to lead the AI to where I wanted it to go. It would take you even longer to do something like this if you have no idea what clustering is, how Arduino or other microcontrollers work, and other fundamentals. You just cannot substitute real-world experience with books. You can (apparently) substitute human-generated software with AI-generated code and get things working. Is it optimal? Probably not. Did it work on the first attempt? No. Did it allow me to do something that would have been basically impossible for me to do? Yes.

I also would like to share something interesting here. Kind of unrelated, but still interesting. It is a snippet from the code creation chat (the thinking part), and I find it intriguing how the AI is self-introspecting and also gives support to its human. Like a pet.

|

| Thinking AI - Gemini Flash 2.0 |

Anyhoo, now that I have the human zone detection system working, all that needs to be done is to integrate this compute-sensor stack with the textile-based heating apparatus that we have already designed, so that based on the zone where a human is detected, the heating for that particular zone is turned on by a simple relay. Fuse them both into a single product and make the world a better place.

Sadly, since this is a bonus post, you get no photos, music or movie recommendations here. Those are reserved for the main posts. But if you want anything specific to this post, the code, the research paper, or the calculations, feel free to reach out. I can't promise you anything because it is still an ongoing project. But you should try your luck.

Cheerio!
